
The spirit of software pioneer Grace Hopper will live on at NVIDIA GTC.

Accelerated systems using powerful processors, named in honor of the pioneer of software programming, will be on display at the global AI conference running March 18-21, ready to take computing to the next level.

System makers will show more than 500 servers in multiple configurations across 18 racks, all packing NVIDIA GH200 Grace Hopper Superchips. They’ll form the largest display at NVIDIA’s booth in the San Jose Convention Center, filling the MGX Pavilion.

MGX Speeds Time to Market

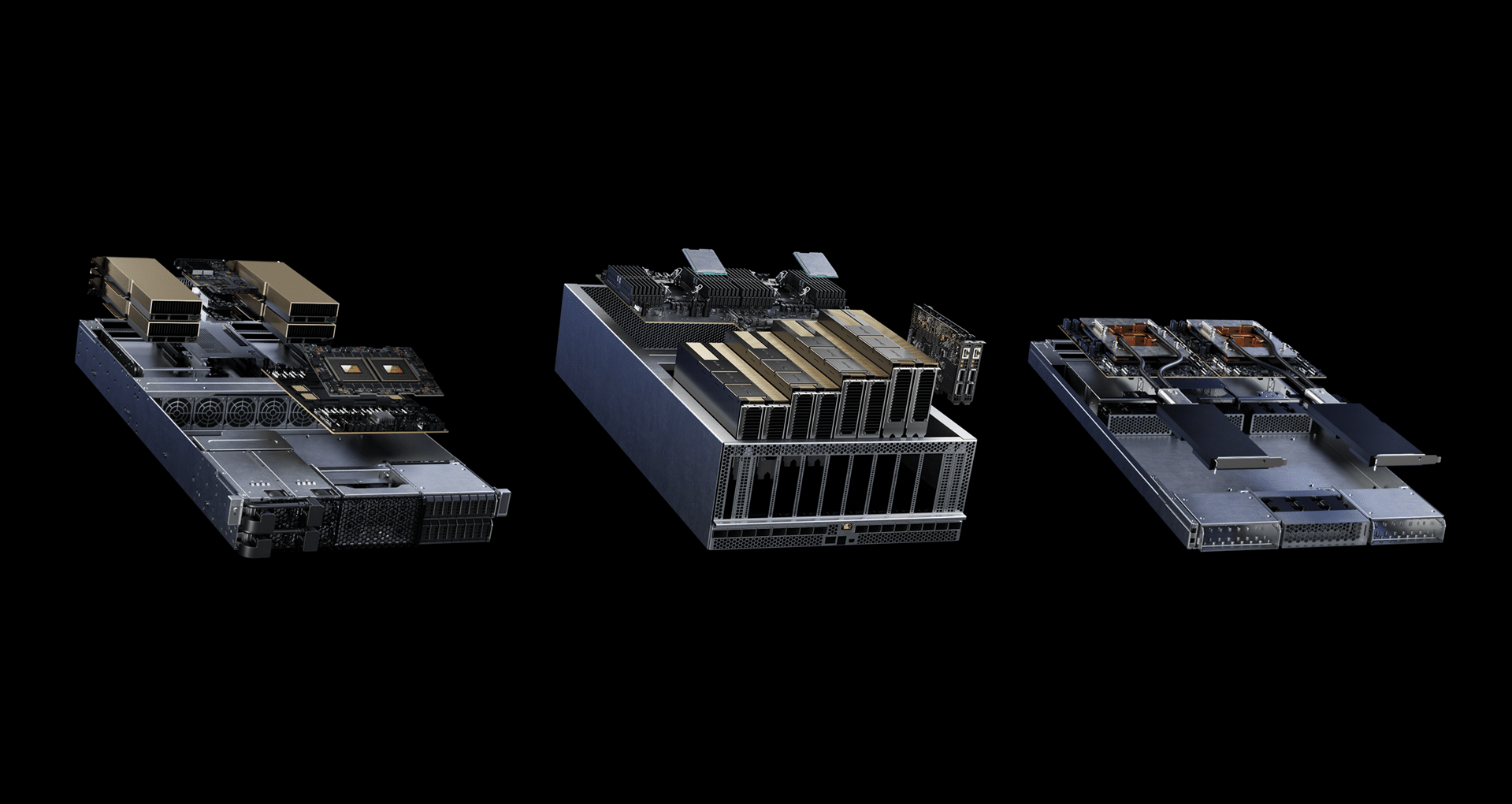

NVIDIA MGX is a blueprint for building accelerated servers with any combination of GPUs, CPUs and data processing units (DPUs) for a wide range of AI, high performance computing and NVIDIA Omniverse applications. It’s a modular reference architecture for use across multiple product generations and workloads.

GTC attendees can get an up-close look at MGX models tailored for enterprise, cloud and telco-edge uses, such as generative AI inference, recommenders and data analytics.

The pavilion will showcase accelerated systems packing single and dual GH200 Superchips in 1U and 2U chassis, linked via NVIDIA BlueField-3 DPUs and NVIDIA Quantum-2 400Gb/s InfiniBand networks over LinkX cables and transceivers.

The systems support industry standards for 19- and 21-inch rack enclosures, and many provide E1.S bays for nonvolatile storage.

Grace Hopper in the Spotlight

Here’s a sampler of MGX systems now available:

- ASRock RACK’s MECAI, measuring 450 x 445 x 87mm, accelerates AI and 5G services in constrained spaces at the edge of telco networks.

- ASUS’s MGX server, the ESC NM2N-E1, slides into a rack that holds up to 32 GH200 processors and supports air- and water-cooled nodes.

- Foxconn provides a suite of MGX systems, including a 4U model that accommodates up to eight NVIDIA H100 NVL PCIe Tensor Core GPUs.

- GIGABYTE’s XH23-VG0-MGX can accommodate plenty of storage in its six 2.5-inch Gen5 NVMe hot-swappable bays and two M.2 slots.

- Inventec’s systems can slot into 19- and 21-inch racks and use three different implementations of liquid cooling.

- Lenovo supplies a range of 1U, 2U and 4U MGX servers, including models that support direct liquid cooling.

- Pegatron’s air-cooled AS201-1N0 server packs a BlueField-3 DPU for software-defined, hardware-accelerated networking.

- QCT can stack 16 of its QuantaGrid D74S-IU systems, each with two GH200 Superchips, into a single QCT QoolRack.

- Supermicro’s ARS-111GL-NHR with nine hot-swappable fans is part of a portfolio of air- and liquid-cooled GH200 and NVIDIA Grace CPU systems.

- Wiwynn’s SV7200H, a 1U dual GH200 system, supports a BlueField-3 DPU and a liquid-cooling subsystem that can be remotely managed.

- Wistron’s MGX servers are 4U GPU systems for AI inference and mixed workloads, supporting up to eight accelerators in a single system.

The new servers are in addition to three accelerated systems using MGX announced at COMPUTEX last May: Supermicro’s ARS-221GL-NR using the Grace CPU and QCT’s QuantaGrid S74G-2U and S74GM-2U powered by the GH200.

Grace Hopper Packs Two in One

System builders are adopting the hybrid processor because it packs a punch.

GH200 Superchips combine a high-performance, power-efficient Grace CPU with a muscular NVIDIA H100 GPU. They share hundreds of gigabytes of memory over a fast NVIDIA NVLink-C2C interconnect.

The result is a processor and memory complex well-suited to take on today’s most demanding jobs, such as running large language models. They have the memory and speed needed to link generative AI models to data sources that can improve their accuracy using retrieval-augmented generation, aka RAG.

Recommenders Run 4x Faster

In addition, the GH200 Superchip delivers greater efficiency and up to 4x more performance than using the H100 GPU with traditional CPUs for tasks like making recommendations for online shopping or media streaming.

In its debut on the MLPerf industry benchmarks last November, GH200 systems ran all data center inference tests, extending the already leading performance of H100 GPUs.

In all these ways, GH200 systems are taking to new heights a computing revolution their namesake helped start on the first mainframe computers more than seven decades ago.

Register for NVIDIA GTC, the conference for the era of AI, running March 18-21 at the San Jose Convention Center and virtually.

And get the 30,000-foot view from NVIDIA CEO and founder Jensen Huang in his GTC keynote.
