
Generative AI is the latest turn in the fast-changing digital landscape. One of the groundbreaking innovations making it possible is a relatively new term: SuperNIC.

What Is a SuperNIC?

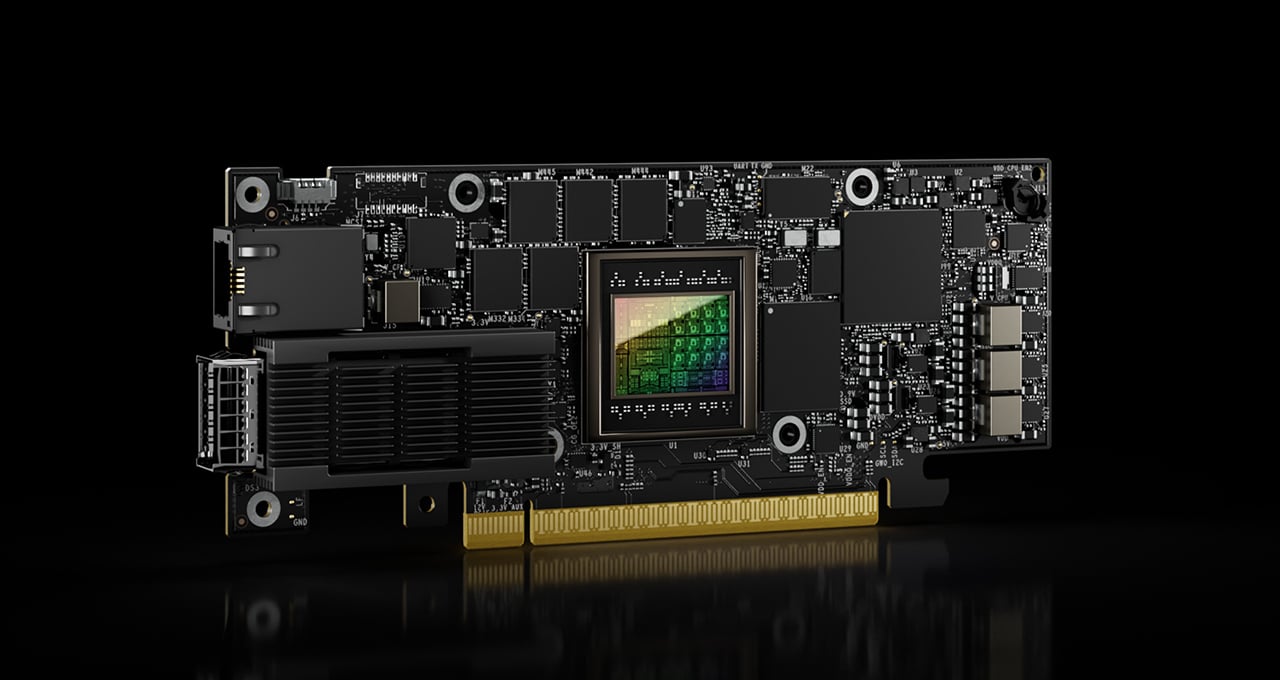

A SuperNIC is a new class of network accelerator designed to supercharge hyperscale AI workloads in Ethernet-based clouds. It provides fast network connectivity for GPU-to-GPU communication, reaching speeds of 400Gb/s using remote direct memory access (RDMA) over converged Ethernet (RoCE) technology.

SuperNICs combine the following unique attributes:

- High-speed packet reordering to ensure that data packets are received and processed in the same order they were originally transmitted. This maintains the sequential integrity of the data flow.

- Advanced congestion control using real-time telemetry data and network-aware algorithms to manage and prevent congestion in AI networks.

- Programmable compute on the input/output (I/O) path to enable customization and extensibility of network infrastructure in AI cloud data centers.

- Power-efficient, low-profile design to efficiently accommodate AI workloads within constrained power budgets.

- Full-stack AI optimization, including compute, networking, storage, system software, communication libraries and application frameworks.
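To make the first attribute concrete, here is a minimal software sketch of packet reordering. It is an illustration only, not a description of how the BlueField-3 hardware implements it: the `reorder` function, the `(sequence number, payload)` tuple layout and the sample data are all assumptions made for this example. Packets that arrive early are held in a buffer and released once every earlier sequence number has been delivered.

```python
from typing import Dict, Iterable, Iterator, Tuple


def reorder(packets: Iterable[Tuple[int, bytes]], first_seq: int = 0) -> Iterator[bytes]:
    """Yield payloads in sequence order, buffering packets that arrive early.

    Hypothetical helper for illustration; real SuperNICs do this in hardware.
    """
    pending: Dict[int, bytes] = {}  # early arrivals, keyed by sequence number
    next_seq = first_seq            # the next sequence number we may release
    for seq, payload in packets:
        if seq < next_seq or seq in pending:
            continue                # already delivered or already buffered: drop duplicate
        pending[seq] = payload
        # Release every packet that is now contiguous with what was delivered.
        while next_seq in pending:
            yield pending.pop(next_seq)
            next_seq += 1


# Packets arrive out of order, as they can when a fabric sprays them across paths.
arrivals = [(1, b"B"), (0, b"A"), (3, b"D"), (2, b"C")]
in_order = list(reorder(arrivals))
print(b"".join(in_order))
```

The receiver sees the payloads in their transmitted order even though the network delivered them shuffled, which is the property the first bullet describes.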

NVIDIA recently unveiled the world’s first SuperNIC tailored for AI computing, based on the BlueField-3 networking platform. It’s part of the NVIDIA Spectrum-X platform, where it integrates seamlessly with the Spectrum-4 Ethernet switch system.

Together, the NVIDIA BlueField-3 SuperNIC and Spectrum-4 switch system form the foundation of an accelerated computing fabric specifically designed to optimize AI workloads. Spectrum-X consistently delivers high network efficiency levels, outperforming traditional Ethernet environments.

“In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery,” said Yael Shenhav, vice president of DPU and NIC products at NVIDIA. “SuperNICs ensure that your AI workloads are executed with efficiency and speed, making them foundational components for enabling the future of AI computing.”

The Evolving Landscape of AI and Networking

The AI field is undergoing a seismic shift, thanks to the advent of generative AI and large language models. These powerful technologies have unlocked new possibilities, enabling computers to handle new tasks.

AI success relies heavily on GPU-accelerated computing to process mountains of data, train large AI models and enable real-time inference. This new compute power has opened new possibilities, but it has also challenged Ethernet cloud networks.

Traditional Ethernet, the technology that underpins internet infrastructure, was conceived to offer broad compatibility and connect loosely coupled applications. It wasn’t designed to handle the demanding computational needs of modern AI workloads, which involve tightly coupled parallel processing, rapid data transfers and unique communication patterns, all of which demand optimized network connectivity.

Foundational network interface cards (NICs) were designed for general-purpose computing, universal data transmission and interoperability. They were never designed to cope with the unique challenges posed by the computational intensity of AI workloads.

Standard NICs lack the requisite features and capabilities for efficient data transfer, low latency and the deterministic performance crucial for AI tasks. SuperNICs, on the other hand, are purpose-built for modern AI workloads.

SuperNIC Advantages in AI Computing Environments

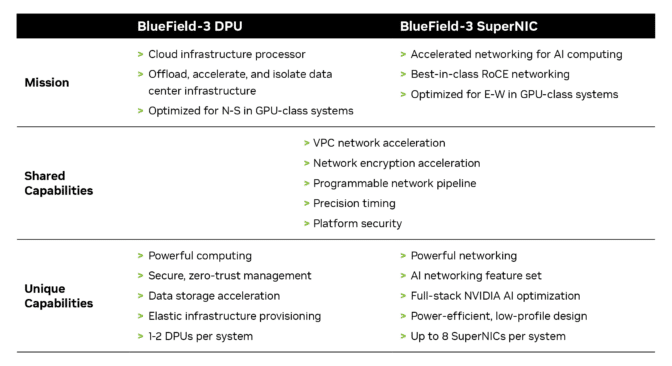

Data processing units (DPUs) deliver a wealth of advanced features, offering high throughput, low-latency network connectivity and more. Since their introduction in 2020, DPUs have gained popularity in cloud computing, primarily because of their capacity to offload, accelerate and isolate data center infrastructure processing.

Although DPUs and SuperNICs share a range of features and capabilities, SuperNICs are uniquely optimized for accelerating networks for AI. The chart below shows how they compare:

Distributed AI training and inference communication flows depend heavily on network bandwidth availability for success. SuperNICs, distinguished by their sleek design, scale more effectively than DPUs, delivering 400Gb/s of network bandwidth per GPU.

The 1:1 ratio between GPUs and SuperNICs within a system can significantly enhance AI workload efficiency, leading to greater productivity and superior outcomes for enterprises.

The sole purpose of a SuperNIC is to accelerate networking for AI cloud computing. Consequently, it achieves this goal using less computing power than a DPU, which requires substantial computational resources to offload applications from a host CPU.

The reduced computing requirements also translate to lower power consumption, which is especially crucial in systems containing up to eight SuperNICs.
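As a rough back-of-the-envelope check on these figures, the sketch below multiplies the per-GPU bandwidth by the maximum SuperNIC count mentioned above. It assumes every port runs at its full 400Gb/s line rate and ignores protocol overhead, so the results are upper bounds rather than measured throughput; the variable names are chosen for this example.

```python
# Figures taken from the text: 400Gb/s per SuperNIC, up to eight SuperNICs per system.
GBPS_PER_SUPERNIC = 400
SUPERNICS_PER_SYSTEM = 8

# Assumption: all ports at line rate, no protocol overhead (an upper bound).
aggregate_gbps = GBPS_PER_SUPERNIC * SUPERNICS_PER_SYSTEM
aggregate_tbps = aggregate_gbps / 1000

# With a 1:1 GPU-to-SuperNIC ratio, each GPU gets one full link.
# Dividing by 8 converts gigabits to gigabytes.
per_gpu_gigabytes_per_s = GBPS_PER_SUPERNIC / 8

print(f"Aggregate: {aggregate_gbps} Gb/s ({aggregate_tbps} Tb/s)")
print(f"Per GPU:   {per_gpu_gigabytes_per_s} GB/s")
```

Under these assumptions, a fully populated eight-SuperNIC system has up to 3.2Tb/s of aggregate network bandwidth, and each GPU can move at most 50 gigabytes per second over its own link.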

Additional distinguishing features of the SuperNIC include its dedicated AI networking capabilities. When tightly integrated with an AI-optimized NVIDIA Spectrum-4 switch, it offers adaptive routing, out-of-order packet handling and optimized congestion control. These advanced features are instrumental in accelerating Ethernet AI cloud environments.

Revolutionizing AI Cloud Computing

The NVIDIA BlueField-3 SuperNIC offers several benefits that make it key for AI-ready infrastructure:

- Peak AI workload efficiency: The BlueField-3 SuperNIC is purpose-built for network-intensive, massively parallel computing, making it ideal for AI workloads. It ensures that AI tasks run efficiently, without bottlenecks.

- Consistent and predictable performance: In multi-tenant data centers where numerous tasks are processed simultaneously, the BlueField-3 SuperNIC ensures that each job and tenant’s performance is isolated, predictable and unaffected by other network activities.

- Secure multi-tenant cloud infrastructure: Security is a top priority, especially in data centers handling sensitive information. The BlueField-3 SuperNIC maintains high security levels, enabling multiple tenants to coexist while keeping data and processing isolated.

- Extensible network infrastructure: The BlueField-3 SuperNIC isn’t limited in scope. It’s highly flexible and adaptable to a myriad of other network infrastructure needs.

- Broad server manufacturer support: The BlueField-3 SuperNIC fits seamlessly into most enterprise-class servers without excessive power consumption in data centers.

Learn more about NVIDIA BlueField-3 SuperNICs, including how they integrate across NVIDIA’s data center platforms, in the whitepaper: Next-Generation Networking for the Next Wave of AI.
